There is no single moment when choreography truly “comes to life.” The art of making dance is its own craft: it’s not simply thinking of a movement and teaching it to a dancer, but the creation of a world. It certainly involves technique, but that’s only one element of many; there’s also the quality of movement, the tempo, the spacing, the narrative, and the music, to name but a few.

In my experience, a dance comes to life over the course of long rehearsal and preparation. At the moment when the performance begins, this thing that had been in my head now exists, now lives in physical, three-dimensional space. It feels magical.

That’s why, when I began investigating the marriage of choreography and artificial intelligence, it made me a little uneasy. Computers live in virtual space; dance is visceral. But the truth is that the biggest problem with choreography and AI is something that choreographers themselves have been struggling with for as long as the art has been around: notation.

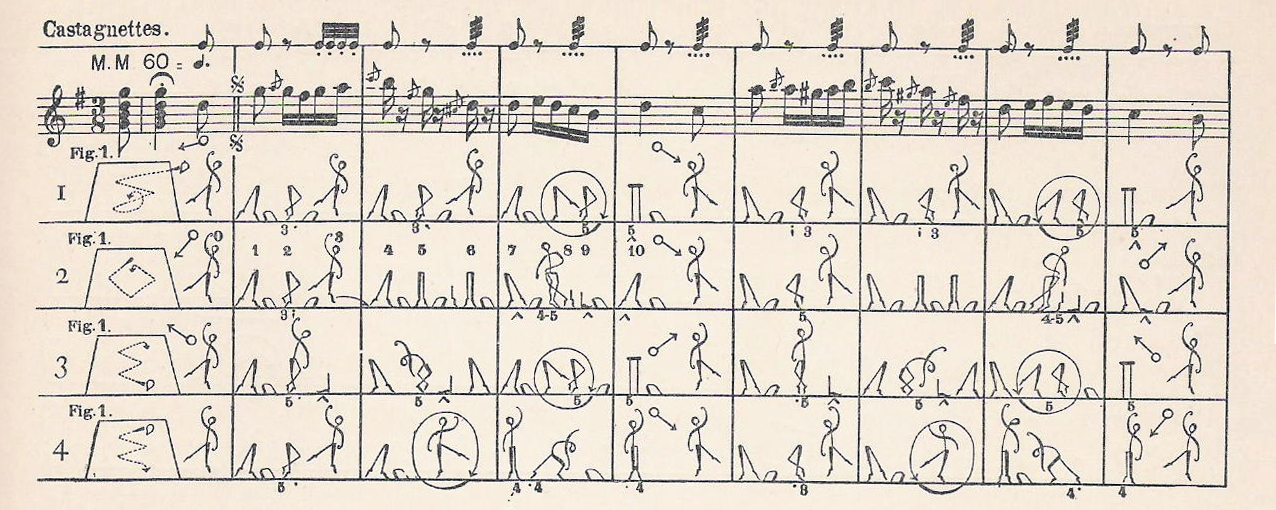

How do you write a dance? Even if you could describe the hundreds, even thousands, of variations of movement in words, you’d still need to describe the quality of the movement, the tempo, the dynamic, the underlying emotion and intention, and the spacing and relation to the other dancers. Even for a computer, it’s nearly impossible to document every detail.

That’s not to say that people haven’t tried. There are a handful of algorithmic systems that were built specifically to choreograph, or at least attempt it. Probably the first choreographer to start experimenting with computers was Merce Cunningham, one of the most significant figures in contemporary Western dance.

Cunningham’s style, which focused primarily on the architecture and shape of the dance, was well suited to the dispassionate nature of a computer. He worked with developers on a system called LifeForms in the late 1980s, a program that generated movement possibilities unbound by the usual limits of gravity and anatomy. It was intended as a creation tool for choreographers and influenced Cunningham’s late work.

While LifeForms is still used today, English choreographer Wayne McGregor and the Open Ended Group took its basic principle and developed it further into what’s known as the Choreographic Language Agent.

Through an insanely complex algorithm, the CLA takes a language phrase and turns it into a geometric shape that follows a certain animation. The dancer then uses this animated geometric shape as a tool for improvisation and creation.

McGregor’s best-known work created with the CLA’s help was his 2013 piece “Atomos.” Watching excerpts on YouTube, however, I’m not sure I would be able to tell that a computer contributed to the choreography. It looks just like a great deal of the human-created choreography I see in contemporary dance. I’m not sure if that means it was successful or not.

These kinds of movement generators are not the only way people have tried to teach a computer to create dance, however. All the way back in the Stone Age (1995), James Bradford and Paulette Côté-Laurence created an AI system called CorX (Choreographic Expert), which used an algorithm with a series of rules and probabilities just like those employed in chatbots. For example, if X movement happened, then Y followed with a 50 percent probability. The program then used these rules to generate an actual dance script.
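To make the idea of rules and probabilities concrete, here is a minimal sketch in Python. It is not CorX's actual code, which I have not seen; the movement names, the probabilities, and the `generate_script` function are all invented for illustration. It only shows the general principle: each movement has a set of possible successors, and one is picked by weighted chance on every beat.

```python
import random

# Hypothetical rule table (an assumption, not taken from CorX):
# each movement maps to its possible next movements and their probabilities.
RULES = {
    "step": [("turn", 0.5), ("reach", 0.5)],
    "turn": [("fall", 0.3), ("step", 0.7)],
    "reach": [("turn", 0.6), ("fall", 0.4)],
    "fall": [("step", 1.0)],
}


def generate_script(start, beats, rng):
    """Return a list of `beats` movements, chosen one beat at a time."""
    script = [start]
    while len(script) < beats:
        options = RULES[script[-1]]
        moves = [move for move, _ in options]
        weights = [weight for _, weight in options]
        # Weighted random choice: "if X happened, then Y follows with probability p".
        script.append(rng.choices(moves, weights=weights, k=1)[0])
    return script


# A seeded generator keeps the result repeatable between runs.
script = generate_script("step", 8, random.Random(1995))
print(" -> ".join(script))
```

The printed line is a bare-bones "dance script": eight beats, each one a movement that the rules allow to follow the one before it.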

While it was never developed much past a very basic stage, CorX’s original concept is quite advanced. It was the only system I found that could deal with multiple dancers, with the same if/then rules and probability system applying to what the dancers do in relation to each other on any given beat, including spatial patterns and tempo.

Some AI dance systems don’t even require human dancers. In 2010, Mária Virčíková and Peter Sinčák from the Department of Cybernetics and AI at the Technical University of Košice in Slovakia built a system to develop choreography for the Nao humanoid robot. Although this was closer to a traditional piece of software than actual AI, the program was able to learn from feedback and develop accordingly. After a few training sessions, it could develop new movements similar to those that it had received positive feedback for.
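A feedback loop of this kind can be sketched in a few lines of Python. This is my own simplified illustration and not the Košice team's method: the move names, the `mutate` and `develop` functions, and the scoring function that stands in for a human viewer are all assumptions. Each round, the program proposes small variations on its current favourite sequence and keeps whichever one is rated highest.

```python
import random

# Hypothetical move vocabulary (an assumption, not the Nao robot's real API).
MOVES = ["raise_arm", "lower_arm", "turn_head", "step_left", "step_right", "bow"]


def mutate(sequence, rng):
    """Copy a sequence and replace one randomly chosen move with a random move."""
    child = list(sequence)
    child[rng.randrange(len(child))] = rng.choice(MOVES)
    return child


def develop(seed, feedback, rounds, rng, variants=5):
    """Each round, propose variants of the current favourite and keep the best rated."""
    best = list(seed)
    for _ in range(rounds):
        candidates = [best] + [mutate(best, rng) for _ in range(variants)]
        best = max(candidates, key=feedback)
    return best


def likes_bows(sequence):
    """Stand-in for a human viewer: this one simply rewards bows."""
    return sequence.count("bow")


seed = ["raise_arm", "step_left", "turn_head", "step_right"]
winner = develop(seed, likes_bows, rounds=10, rng=random.Random(2010))
print(winner)
```

Because the current favourite always competes against its own variants, the rating can only stay the same or improve from round to round. In a real setting the `feedback` function would be a person watching and scoring each sequence.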

During testing, a basic movement sequence was evaluated and developed using this process. The “winning” sequence, following feedback, was then programmed into three Nao robots, which offered a rather adorable display. Still, I’m not sure I’d call this a “dance performance.” It’s more of a technological performance. Dance, you see, is more than just the movement.

Try this: Take your right hand, palm up, fingers slightly stretched but relaxed. Now bring it up to the left side of your chest. As your hand touches and presses against your chest, let your shoulders hunch a little, as though the pressure of your hand pushes your chest inwards.

In doing this, suddenly a simple movement carries an emotional weight. It’s more than the shape: It’s a story, a narrative, and that is where a dance comes alive.

At the end of the day, as with many other attempts at getting computers to be creative, none of these programs can stand alone. At best, they are improvisational tools, like any other improvisational game that choreographers play. They require a human actor to make certain decisions, and of course, a human dancer to execute.

In many ways, this is not so different from the human-creation process. After all, the choreographer also requires a dancer to execute their ideas, and the interplay of the creative system between the choreographer and dancer is never as simple as “the choreographer creates, the dancer dances.”

If we want to make a computer that can create a dance on its own, there are so many variables to consider. Currently, software only focuses on the architecture of dance. Perhaps if that focus shifted to the narrative and emotional aspects of the art, a computer might come closer to something more impressive.

In my experience, certain movements and movement qualities naturally embody different emotions. Perhaps the AI could learn these associations and expand on them; then, using a certain emotional structure (for example, happy to sad and back to happy), it could suggest a movement sequence.
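As a thought experiment, the suggestion step might look something like the Python sketch below. Everything in it is hypothetical: the emotion labels, the movements associated with them, and the `suggest_sequence` function are placeholders I made up, and a real system would have to learn such associations rather than have them typed in by hand.

```python
import random

# Invented associations between an emotion and movements (assumptions only).
ASSOCIATIONS = {
    "happy": ["jump", "open arms", "quick turn"],
    "sad": ["hunch shoulders", "slow walk", "hand to chest"],
}


def suggest_sequence(arc, per_emotion, rng):
    """Walk through the emotional arc and pick movements for each stage."""
    sequence = []
    for emotion in arc:
        # Sample without repetition within a single stage of the arc.
        picks = rng.sample(ASSOCIATIONS[emotion], per_emotion)
        sequence.extend((emotion, move) for move in picks)
    return sequence


# The arc from the text: happy to sad and back to happy.
phrase = suggest_sequence(["happy", "sad", "happy"], 2, random.Random(0))
for emotion, move in phrase:
    print(f"{emotion}: {move}")
```

The output is only a list of suggestions in the order of the arc; deciding how those movements connect, and whether they mean anything, is still left to the choreographer and the dancer.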

Ultimately, though, while this could be a better way of creating something beyond just embodied architecture, a computer will never sit in the back of a theatre and experience the cocktail of sheer terror and excitement that comes with watching your own dance being performed onstage.

In the coming years, artificial intelligence may serve as a familiar tool for choreographers, a valuable source of new material and ideas. But in my opinion, the act of creation and embodiment in itself, that little spark of magic, will always remain human.

How We Get To Next was a magazine that explored the future of science, technology, and culture from 2014 to 2019. This article is part of our Talking With Bots section, which asks: What does it mean now that our technology is smart enough to hold a conversation?